Science behind Deep-Image.ai

Big data, artificial intelligence, machine learning etc… we all heard these words multiple times in many contexts. Everything should be easily automated and put into correct use. The idea behind Deep-image.ai is kind of similar — to make our lives easier in terms of upscale and image enhancement.

How does it really happen, though? What’s behind these “big words”?

It all starts with data… (of course)

Each graphic file is a matrix and a set of data stored in it (numbers — pixels). After enlarging the image, the amount of data does not increase, so the image obtained has visually worse quality. By default, filtering techniques e.g. bicubic interpolation (using Photoshop or other tools), are used to improve the value. Meanwhile, in Deep-Image.ai, thanks to the use of machine learning, we get a larger image with a much better quality compared to bicubic interpolation. By using the super-resolution (SR) technique the application reconstructs the image, or sequence with a higher resolution, from the low resolution (LR) images.

Neurons ready and in action

The core of the application is a CNN or ConvNet. In machine learning, it is a class of convolutional neural networks, which are successfully used for image analysis. CNNs are designed to require minimal pre-processing, compared to other image classification algorithms. It means that the network learns the filters that were manually developed in traditional algorithms.

The convolutional networks are based on biological processes, modeled on human neurons. In Deep image.ai networks are developing. The more examples they get (they analyze more data), the more intelligent the application will be. The network learns that the line on the graphic can not have sharp edges and should be smooth — more options for smoothing, the better the quality of output file is.

Following an intriguing pattern

Working with neural networks does not look like a standard programming process. More like science, based on showing the pattern. Check this:

- in Deep-Image.ai on the input we have low-resolution graphics, while on the output we have to get a high resolution. At the beginning of cooperation with the neural network, we set random parameters

- From the moment of entry, the neural network learns how to create good quality graphics with the help of various transformations

- The network counts an error by analyzing the difference between the input (the starting image) and the output (the final image). Then it modifies the weights so that the difference between successive exits is as small as possible.

- The learning and creation process is based on algorithms (filter sets). Neural network assimilates information about a given edge so that in the end the line is smooth.

Application operation is based on the iterative process, which brings the final graphics almost to perfection. Everything happens automatically through a framework that is used in Deep-image.ai - Keras (high-level API written in Python for the tangled neural networks TensorFlow and Theano).

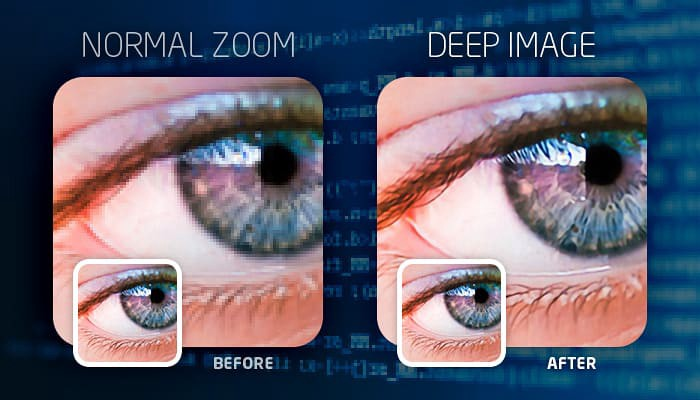

That is how we can achieve such amazing results:

Let us know in case you have any comments or questions — always happy to share some knowledge. Remember, you can always upscale photos using deep-image.ai